Does Technical Analysis Work? Here’s Proof! (Part 2)

Posted by Mark on April 9, 2021 at 07:04 | Last modified: March 10, 2021 13:33

Today I continue with commentary and analysis of Janny Kul’s TDS article with the same title.

Kul explains p-hacking:

> If we run multiple permutations over and over and we just stop when
> we reach one that looks favourable, this lands us in a situation
> statisticians call p-hacking.
>
> Much like a series of coin tosses, there is a chance, however small,
> that we continually land on heads.

Indeed, I have now learned about how to run Bonferroni and Šidák corrections for multiple comparisons in Python.
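As a minimal sketch of what those two corrections do (plain Python rather than any particular library's API; the function names and the 40-test example are illustrative assumptions), each one shrinks the per-test significance threshold so the family-wise error rate stays near the chosen alpha:

```python
def familywise_error(alpha: float, m: int) -> float:
    """Chance of at least one false positive across m independent tests run at level alpha."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_alpha(alpha: float, m: int) -> float:
    """Per-test threshold under the Bonferroni correction."""
    return alpha / m


def sidak_alpha(alpha: float, m: int) -> float:
    """Per-test threshold under the Sidak correction (assumes independent tests)."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


alpha = 0.05
m = 40  # hypothetical number of comparisons

print("uncorrected family-wise error:", familywise_error(alpha, m))
print("Bonferroni per-test alpha:", bonferroni_alpha(alpha, m))
print("Sidak per-test alpha:", sidak_alpha(alpha, m))
```

With 40 uncorrected tests at 0.05, the chance of at least one spurious "significant" result is well over 80%, which is the mirage of significance described in the quote.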

Kul continues by saying we need a better test for comparison to avoid what could be a mirage of significance caused by multiple comparisons. One possibility is to compare with a buy-and-hold group, but:

> The problem… is that some instruments are inflationary (like Gold
> and Stocks…) and some aren’t (like USD — in an inflationary
> environment the dollar would likely depreciate).
>
> This isn’t a fair test because if a technical indicator is… right 51% of
> the time, we may be able to reasonably deduce there’s Alpha, but
> if we compare it against stock, well we’d expect stocks to be positive
> more than 51% of the time given the economy grows over time
> (historically on a daily basis the S&P 500 is +ve 55% of the time).

Kul is essentially claiming inflation to be a confounding variable (see fourth paragraph here) when looking for alpha. I don’t know that I agree. One internet source states long-term historical inflation to be ~3.2%. Regardless of the exact number, it’s positive and it happens most years. Any TA strategy that does not exceed this is not worth trading, in my opinion, regardless of whether inflation actually boosts baseline buy-and-hold performance.*

For whatever reason, stocks generally melt higher to such a degree that most long equity strategies I studied outperformed over the long-term. I believe (not yet studied) real estate melts higher. Gold seems to melt higher, but my studies did not show consistent outperformance. Contrary to Kul’s inflation hypothesis, I found oil—a commodity priced in USD—to face increased headwinds when traded long (see third paragraph here). This may be due to a particular 4-year time interval of oil prices, though: I need to look at a longer-term chart for verification.

Kul then goes on to say a better approach is the (in Python parlance) train_test_split method, which is to say use IS and OOS periods for comparison:

> [Acceptable performance would be] over 50% right in both the train
> period and test period (i.e. do both produce positive P&L) or we
> require some arbitrary threshold like 0.8x of the outperformance
> from the train period to conclude whether a particular indicator
> “works” or not.
>
> The easiest way… to test this is… to run a simulation of every
> indicator (x4) on every instrument (x10) for, say, the first 6 months
> of 2018 so that’s 40 P&L scenario’s across x3 charts. Then we take
> the top 10 best performing combinations (or we could even take all
> of the ones that have produced positive P&L) and run them for
> another 3 months then look at the performance.
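One way to read the procedure Kul describes, sketched under assumptions: the combination labels and P&L figures below are invented placeholders, the helper names are hypothetical, and P&L is converted to a monthly rate so the 6-month train period and 3-month test period are comparable (the quote does not say how to normalize). The check also requires both of Kul's criteria at once, which is stricter than either alone.

```python
from typing import Dict, List, Tuple

Combo = Tuple[str, str]  # (indicator, instrument); labels are placeholders

TRAIN_MONTHS = 6  # "first 6 months of 2018"
TEST_MONTHS = 3   # "another 3 months"


def select_top(train_pnl: Dict[Combo, float], n: int = 10) -> List[Combo]:
    """Return the n combinations with the highest train-period P&L."""
    return sorted(train_pnl, key=lambda c: train_pnl[c], reverse=True)[:n]


def holds_up(train_total: float, test_total: float, ratio: float = 0.8) -> bool:
    """True if both periods are profitable and the test period's monthly P&L
    is at least `ratio` times the train period's monthly P&L."""
    train_rate = train_total / TRAIN_MONTHS
    test_rate = test_total / TEST_MONTHS
    return train_rate > 0 and test_rate > 0 and test_rate >= ratio * train_rate


# Invented numbers purely for illustration.
train = {("IND_A", "SPY"): 1200.0, ("IND_B", "GLD"): 300.0, ("IND_C", "USO"): -450.0}
test = {("IND_A", "SPY"): 500.0, ("IND_B", "GLD"): -100.0, ("IND_C", "USO"): 200.0}

for combo in select_top(train, n=2):
    print(combo, holds_up(train[combo], test[combo]))
```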

I think this is all legit, but the true brilliance comes next.

* — For starters, one way to study this would be to look for differences in annual stock returns between inflationary versus deflationary years.

Does Technical Analysis Work? Here’s Proof! (Part 1)

Posted by Mark on April 6, 2021 at 07:26 | Last modified: March 9, 2021 15:09

While the title may strike you as clickbait, it’s really based on an August 2019 TDS article written by Janny Kul that is (as of the time of this writing) available online. In this blog mini-series, I am going to do some analysis of the article followed by a bit of extra digging at the end that you really won’t want to miss: stay tuned.

Also in 2019, I wrote a blog mini-series on the same topic where I presented and commented on a sampling of others’ beliefs about technical analysis (TA). This is more of the same except I will be focusing solely on the Janny Kul article. What I particularly like about the article pertains to this fourth paragraph: Kul gives us supporting data, which I find comparable to some of my studies described here.

I will go through Kul’s article quoting and commenting on different parts. I strongly encourage you to find the whole article online for a very interesting read.

> Given computing power nowadays, in a matter of moments we can simply
> test every possible indicator (~27), across every standard timeframe
> (~15), across every possible tradable instrument (>100,000).

This would be 27 * 15 * 100,000 = 40.5M strategies. What kind of computer does Kul have access to that can do this in a “matter of moments?!” Backtesting over 8 – 12 years, my computer was taking 20+ minutes to backtest a double-digit number of strategies. If I round up to 100 and only consider 40M strategies, then it would take 400,000 times as long to run Kul’s backtest on my computer, which is ~15 years. A computer 1,000 times faster than mine would [only] take ~6 days. I have been thinking of upgrading so…
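The arithmetic above can be checked with a few lines. This is only a back-of-the-envelope sketch: the variable names are mine, and the inputs are the rounded figures from the paragraph (20 minutes per batch, batches rounded up to 100 strategies, 40M strategies).

```python
strategies = 27 * 15 * 100_000      # indicators x timeframes x instruments
rounded_strategies = 40_000_000     # rounded down from 40.5M
batch_size = 100                    # double-digit batch rounded up to 100
minutes_per_batch = 20              # "20+ minutes" per batch

batches = rounded_strategies / batch_size            # how many times longer than one batch
total_minutes = batches * minutes_per_batch
years = total_minutes / (60 * 24 * 365)
days_if_1000x_faster = total_minutes / 1000 / (60 * 24)

print(f"{strategies:,} strategies")
print(f"{batches:,.0f} batches, about {years:.1f} years")
print(f"about {days_if_1000x_faster:.1f} days on a machine 1,000 times faster")
```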

I would question whether it’s reasonable to undertake a backtest of that complexity. Even at that, he’s talking about applying TA “strictly how the indicators were intended to be used.” Not only do many people not believe those settings to be the best,* I strongly believe it important to explore the surrounding parameter space as I describe in paragraphs 4-5 here. This is more what I was doing in my studies where I had up to double-digit iterations. At 100 iterations per strategy, this turns the 40.5M strategies into roughly 4 billion (40.5M × 100) or many, many more because even 100 iterations is small compared to the finer parameter grids most strategy developers seem to use. I discuss this in paragraphs 2-3 here and the last paragraph here.

I therefore disagree with Kul in his assessment of how simple a complete analysis of TA can be.

Nevertheless, Kul does present some actual data in the article and I will get to studying that next time.

* — Many comments imply this if you read closely in Part 2, Part 3, and Part 4; only the reply in Part 6 suggests simple strategies can actually work.

Automated Backtester Research Plan (Appendix B)

Posted by Mark on November 3, 2020 at 07:44 | Last modified: May 12, 2020 14:33

From Jan 2019, this should be the final entry of the current blog mini-series (see Appendix A introductory remarks).

—————————

More relevant to the WF approach, could we shorten the period to X months and look at the average and extremes of the distribution of daily price changes? We would be stopped out going into a big change. When placing trades in the new market environment, we should get compensated by higher option premiums. This gives us a fighting chance and if we can somehow decrease position size in accordance with ATR, then maybe we can keep the average PnL changes somewhat constant across anything from calm to volatile market environments. Stuff to think about.

I don’t believe this holds any relevance with regard to the daily/serial trades categorization (see Part 5 paragraphs 2-3). This may be something to study with regard to allocation or it may be something to monitor before trade entry. A study of ATR versus future MAE may help to determine what relationship (if any) exists between the two.

If the market goes up more than it goes down, then why not trade bullish butterflies rather than naked puts? Position sizing aside, the risk is much lower for the butterflies.

My initial thought on this was to study MAE and MFE of the underlying over the next ~30 days. Mild MAE numbers and better MFE would signify a market moving gradually higher. The key for butterfly profitability is when the market gets inside the expiration tent, which would not be indicated by this study. If we target something like 10%/-20% PT/max loss, though, then any movement up will likely get close to the PT unless the market skyrockets.

We could also study these max excursion distributions over different time intervals (e.g. 5-20 days by increment of 5). The butterfly would be indicated if MAE remains constant at some point while MFE continues to grow. Another indication would be a high percentage of cases showing MAE to be contained while MFE is large. Another indication would be MFE limited and positive within a certain time range. A final indication would be tip of bell curve corresponding to a set range. We could study butterflies that are centered X% above the money to X% below the money and calculate differences in profit.
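A rough sketch of the excursion study could look like the following. It assumes a long position, uses closing prices only (intraday highs and lows would give larger excursions), and the price series is made up; the function name is hypothetical.

```python
from typing import List, Tuple


def excursions(closes: List[float], entry: int, horizon: int) -> Tuple[float, float]:
    """Return (MAE, MFE) as fractions of the entry price for a long position
    entered at index `entry` and tracked over the next `horizon` bars."""
    entry_price = closes[entry]
    window = closes[entry + 1 : entry + 1 + horizon]
    if not window:
        raise ValueError("no bars after entry")
    returns = [price / entry_price - 1.0 for price in window]
    mae = min(0.0, min(returns))  # worst move against the position (<= 0)
    mfe = max(0.0, max(returns))  # best move in favor of the position (>= 0)
    return mae, mfe


# Made-up closing prices for illustration only.
closes = [100, 101, 99, 102, 104, 103, 106, 108, 107, 110, 111]

for horizon in (5, 10):
    mae, mfe = excursions(closes, entry=0, horizon=horizon)
    print(f"{horizon} days: MAE {mae:.2%}, MFE {mfe:.2%}")
```

In this toy series MAE stays flat between the two horizons while MFE keeps growing, which is the first pattern described above as favoring the butterfly. A real study would repeat this for every entry date and look at the distributions.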

We could also look at the asymmetrical butterfly (butterfly + PCS), but spread width would have to be specified, etc.

As a simpler backdrop against which to compare all recent discussion (this blog mini-series), consider an all-equity portfolio where backtesting can easily be done with fixed position size throughout, as defined by:

Number of shares traded = (initial number of shares * initial stock price) / current stock price

Normalized drawdown can then be calculated at any point as a percentage of the initial account value.
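As a small illustration of the formula and the normalization (function names and numbers are hypothetical, and reading "normalized drawdown" as the drop from the running equity peak divided by initial account value is my assumption):

```python
from typing import List


def shares_to_trade(initial_shares: float, initial_price: float, current_price: float) -> float:
    """Keep the dollar size of each trade equal to the initial dollar size."""
    return (initial_shares * initial_price) / current_price


def normalized_drawdown(equity_curve: List[float], initial_value: float) -> List[float]:
    """Drop from the running peak at each point, as a fraction of initial account value."""
    peak = equity_curve[0]
    result = []
    for value in equity_curve:
        peak = max(peak, value)
        result.append((peak - value) / initial_value)
    return result


# 100 shares at $50 is a $5,000 position; at $40 the same dollars buy 125 shares.
print(shares_to_trade(100, 50.0, 40.0))

# Made-up equity curve starting from a $100,000 account.
print(normalized_drawdown([100_000, 105_000, 98_000, 110_000], 100_000))
```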

Categories: Backtesting | Comments (0) | Permalink

Automated Backtester Research Plan (Appendix A)

Posted by Mark on October 29, 2020 at 07:19 | Last modified: May 12, 2020 12:10

I’ve been getting more organized this year by converting incomplete drafts into finished blog posts. I thought I had wrapped up the automated backtester mini-series here, but I was wrong! I have one more draft with research notes on potential future directions. On the off chance someone out there can possibly benefit, from Jan 2019 I present the next two posts.

—————————

With regard to iron condors (split-strike butterflies), maybe we set short strikes at 10-40 delta by increments of 10 and figure out what the wing widths are going to be. Maybe we close an offended vertical if short delta increases by X or if trade gets down 6% when using profit/loss targets of 10%/-15% or so. Maybe we have to calculate max potential profit and look to collect 50-90% of that as early management (or closing at 7-21 DTE by increments of 7).
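The parameter ranges in that paragraph could be enumerated as two small grids, one for each early-management rule. This is only a sketch: the dictionary keys are hypothetical, and the 10-point step for the 50-90% profit target is an assumption since no increment is stated.

```python
from itertools import product

short_deltas = range(10, 41, 10)    # 10, 20, 30, 40 delta short strikes
profit_targets = range(50, 91, 10)  # 50-90% of max profit; step of 10 is assumed
exit_dtes = range(7, 22, 7)         # close at 7, 14, or 21 DTE

# Variant 1: manage early by taking a percentage of max potential profit.
profit_grid = [
    {"short_delta": delta, "take_profit_pct": pct}
    for delta, pct in product(short_deltas, profit_targets)
]

# Variant 2: manage early by closing at a fixed DTE.
dte_grid = [
    {"short_delta": delta, "exit_dte": dte}
    for delta, dte in product(short_deltas, exit_dtes)
]

print(len(profit_grid), "profit-target variants")
print(len(dte_grid), "DTE-exit variants")
```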

From the previous post on vertical spreads we could go in a couple different directions. I talked about one specific trading plan called “The Bull.” I would like to backtest a few other particular trades (i.e. Netzero, STT, RC). I could give some particulars about those trades or maybe the backtesting plan just like I did for The Bull.

Finally, and many of these could be posted under “additional considerations” (along with many of my non-automated-backtester backtesting ideas), I have to face the possibility that all of this is done in vain. We’re looking at historical data and possibly setting critical levels that only get breached in 5% of cases. This may make me feel more comfortable when taking action at these points, but it certainly is no guarantee they will be effective in case we are in a longer-term period where distribution on that parameter changes. To that end, it would be nice if I could somehow split the data and do some WFA but I fear the sample size may be too small (see third paragraph here).

This concern even applies to something as general as daily option-price changes. Looking over the whole data span, option prices generally only change by $X/day. When IV picks up and backwardation occurs with huge ATRs, though, those daily price changes are going to be magnified—possibly 5-10X or more. Clearly this indicates a time to step out, but what if such volatile activity persists for a period of years? This trading approach might be on the sidelines for the duration.

I will conclude next time.

Categories: Backtesting | Comments (0) | Permalink

Bullish Iron Butterflies (Appendix)

Posted by Mark on September 28, 2020 at 06:54 | Last modified: May 8, 2020 10:19

I’ve been going through my “drafts” folder this year trying to finish partially-written blog posts and get more organized. This post began an eight-part mini-series on bullish iron butterflies (IBF). My drafts folder contained one additional post containing miscellaneous thoughts about the trade and development. In the longshot case that someone out there could possibly benefit from any of this, here is that post from Sep 2017.

—————————

With the bullish IBF, I have found profit factor to be much lower and backtesting with OptionVue to be complicated. What about profit per commission?

Intuitively, I feel the market moves around in gross points more than proportionately to the underlying value. Does ATR truly capture this? If not, then perhaps fixed width rather than a dynamic butterfly is the way to go. In that case, I should just repeat my backtesting with different widths and see what works best. That would also allow for easier MAE analysis, which I might be finding right now is impossible due to varying widths.

I thought the bullish butterfly would perform better because of the general upward bias to equities over the last decade. I’d like to compare this to an ATM butterfly.

Other future directions include:

- Break down PnL by width

- MAE distribution

- Slippage analysis

- Break down PnL by Osc

- Categorize by Average IV

- Study effect of time stop

- Histogram of days in trade for winners (earlier exit, lower potential loss since T+0 maintains height over max loss)

The preliminary trade statistics did not record trade price, which I’m guessing is lower for the symmetric than the bullish IBF.

I haven’t dealt much with the differential margin requirement on each trade. What about widening the narrow structures in case that’s just leftover margin anyway?

Is there an issue with profit contribution when trading width-adjusted butterflies? The wider structures contribute more profit than the narrow ones. The narrow ones lose less because they are more diluted but they also return less in terms of ROI (in addition to gross).

Categories: Backtesting | Comments (0) | Permalink

Worst of Naked Puts for 2020 (Part 2)

Posted by Mark on July 28, 2020 at 06:44 | Last modified: July 12, 2021 12:41

Last time, I presented some manual backtesting in OptionNet Explorer selling the most inopportune monthly naked puts ~120 DTE in Feb 2020. Today I continue with more analysis, which actually takes the form of an e-mail sent to a colleague (and will therefore remain largely unchanged).

I do think this is cherry-picking the absolute worst, but you think even the absolute worst should be heeded because the market will eventually find it. Had you started a few weeks earlier or been running this campaign for several months, you would be net long puts and perhaps completely fine.

What comes next, though?

In 30 days, the market is down 950 points, which is 28%. Certainly once IV has spiked, the losses on subsequent NPs won’t be as big—but still significant. I once sold some NPs with a 3x SL. The worst in the current backtest is down 75x. That’s a wide swath for tremendous devastation: 65x, 55x, 45x, 35x, 25x, 15x, 5x, etc. Anything sold during this time is going to lose big money, which you somehow have to manage.

If you stayed out and sold no new NPs (trade guidelines needed), then when would you get back in?

And once you get back in, it’s going to take some time for those to mature and get converted to net long puts. Only then can you start placing income structures. Won’t you be starting back again at just one contract, no matter how large your account had grown and what the average annual monthly target is? Maybe it would be one tranche and the number of contracts per tranche would grow as your account grows. I’m not sure, but in any case it would be one when you’re used to having on several. What would be your guidelines to “resume” the portfolio?

On the plus side, any income structures placed in March would be in higher-IV conditions thus more resilient anyway. Might these not need a tail hedge (or would they automatically include one per the dynamic-sizer spreadsheet)? Actually, that’s a whole different structure than the tail hedge; let’s not mix multiple trading plans at once.

I can imagine a rudimentary trend-following strategy keeping you out of much of the downdraft, but I still have the same concern as explained two paragraphs above. The factory gets disrupted if you’re out for much longer than it usually takes to generate new hedges. You will eventually go from a steady state average to a lower number of hedges, which somehow means you have to decrease position size of otherwise unprotected income generators.

Maybe this is a “first-world problem” because it merely implies lower profit. The opportunity cost concern is exactly what leads some traders to size too large, though, which can later result in catastrophic loss.

Categories: Backtesting | Comments (0) | Permalink

Worst of Naked Puts for 2020 (Part 1)

Posted by Mark on July 23, 2020 at 17:12 | Last modified: July 12, 2021 12:01

I’ve been revisiting the idea of naked puts (NP) and did some backtesting of Feb-Mar 2020, which is when the most recent market crash hit hardest.

I actually composed this as an e-mail to someone and I’m going to leave it largely unchanged. Trade management dictates rolling down and out with unspecified parameters. Being unspecified, I just allude to the roll in a general fashion.

Here’s the worst for 2020: 12 Jun 2425 NPs sold for $4.30 on 2/19/20 (121 DTE) with SPX at 3387.41 and Avg IV 11.49.

On 2/24/20, with SPX at 3229.63 (Avg IV 21.78) the NP is $11.05. Would you roll with the strike at 2425? If so, then you could roll down 75 points and out one month for a credit. Thereafter, you can’t roll down more than 50 points for a credit.

On 3/16/20 (95 DTE), NP is ITM and $317.90 with SPX 2409.18 (Avg IV 78.49). For a credit, you can roll out one month and down 25 points. You could also roll out two months and down 50 points for a credit.

On 3/18/20 (93 DTE), NP is ITM and $326.90 with SPX 2382.30 (Avg IV 74.65). For a credit, you can roll out one month and down 25 points. You could also roll out two months and down 75 points for a credit. It’s hard to imagine execution, though. OI exists, but the options are very expensive. In normal markets, $0.05 slippage on $5.00 is 1%. Here, 1% is ~$3.25. Compound that with market crash conditions…

On 3/19/20 (92 DTE), NP is ITM and $275.90 with SPX 2409.63 (Avg IV 68.98). For a credit, you can roll out one month and down 50 points. You could also roll out two months and down 75 points for a credit (not with the lousy execution, though).

On 3/20/20 (91 DTE), NP is ITM and $293.25 with SPX 2309.66 (Avg IV 62.48). For a credit, you can roll out one month and down 25 points. You could also roll out two months and down 75 points for a credit (not with the lousy execution, though).

On 3/23/20 (88 DTE), NP is ITM and $285.55 with SPX 2253.66 (Avg IV 60.94). For a credit, you can roll out one month and down 25 points. You could also roll out two months and down 50 points for a credit (not with the lousy execution, though).

On 4/1/20 (79 DTE), NP is ITM and $193.05 with SPX 2472.64 (Avg IV 56.08). For a credit, you can roll out one month and down 50 points. You could also roll out two months and down 100 points for a credit (not with the lousy execution, though).

It gets better after that, but any of these losses are horrendous. Even 4/1/20 is 44x the initial credit, and this doesn’t even account for the fear level of trying to roll in these market conditions. On 3/18/20, the original 12 NPs would be down $387K.
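For anyone wanting to check those figures, here is the arithmetic as a short sketch. The helper names are mine; the prices, credit, and contract count come from the backtest above, and the $100 multiplier is the standard SPX option multiplier.

```python
CREDIT = 4.30      # initial credit per contract, in index points
CONTRACTS = 12
MULTIPLIER = 100   # SPX options: $100 per index point


def loss_multiple(option_price: float, credit: float = CREDIT) -> float:
    """Open loss expressed as a multiple of the initial credit."""
    return (option_price - credit) / credit


def position_loss(option_price: float, credit: float = CREDIT,
                  contracts: int = CONTRACTS, multiplier: int = MULTIPLIER) -> float:
    """Open loss in dollars for the whole position."""
    return (option_price - credit) * multiplier * contracts


for date, price in {"3/18/20": 326.90, "4/1/20": 193.05}.items():
    print(f"{date}: {loss_multiple(price):.1f}x credit, ${position_loss(price):,.0f} open loss")
```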

I will continue next time.

Categories: Backtesting | Comments (0) | Permalink

Automated Backtester Research Plan (Part 10)

Posted by Mark on May 14, 2020 at 07:24 | Last modified: May 12, 2020 11:19

I’ve been getting more organized this year by converting incomplete drafts into finished blog posts. From the mini-series on the automated backtester, here is one further post (Jan 2019) on the off chance that someone out there could possibly benefit.

—————————

Relatively simple adjustment criteria to study: rolling out a short option or closing a losing vertical. Maybe also rolling a wing closer when the market is up X% or when the market reaches the opposite long strike (because it has become cheaper to roll).

The next step will be to tabulate several statistics for the serial, non-overlapping approach. These include number (and temporal distribution) of trades, winning percentage, compound annualized growth rate (CAGR), maximum drawdown percentage, average days in trade, PnL per day, risk-adjusted return (RAR), and profit factor (PF). Equity curves will represent just one potential sequence of trades and some consideration could be given to Monte Carlo simulation. We can plot equity curves for different account allocations such as 10% to 50% initial account value by increments of 5% or 10%.

Allocation may be done based on maximum risk of the position (wing width minus credit received for iron spreads) or stop-loss at max potential profit. When considering this, it would be interesting to see what the distribution of losers looks like as a percentage of max potential profit (credit received, for an iron butterfly).

RAR may have two meanings. As used above, RAR is CAGR (or total return) divided by maximum drawdown percentage. When studied with filters, RAR may mean CAGR (or total return) divided by percentage of time in the market.
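A few of these statistics, including both meanings of RAR, could be computed along these lines. This is a sketch with hypothetical function names and made-up inputs, not the backtester's actual code.

```python
from typing import Sequence


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annualized growth rate."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def max_drawdown_pct(equity: Sequence[float]) -> float:
    """Largest peak-to-trough decline as a fraction of the peak."""
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


def profit_factor(trade_pnls: Sequence[float]) -> float:
    """Gross profit divided by gross loss."""
    gross_profit = sum(p for p in trade_pnls if p > 0)
    gross_loss = -sum(p for p in trade_pnls if p < 0)
    return float("inf") if gross_loss == 0 else gross_profit / gross_loss


# Made-up example: yearly equity marks and four trade results.
equity = [100.0, 120.0, 90.0, 150.0]
growth = cagr(equity[0], equity[-1], years=3)
drawdown = max_drawdown_pct(equity)

rar_by_drawdown = growth / drawdown  # first meaning: CAGR / max drawdown
rar_by_exposure = growth / 0.60      # second meaning: CAGR / fraction of time in market (0.60 assumed)

print(growth, drawdown, rar_by_drawdown, rar_by_exposure)
print(profit_factor([500, -200, 300, -100]))
```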

Some elementary filters can also be studied. Average true range (ATR) (eight or 14 periods) under a certain value (analyzed as a range) could be an entry criterion. Determining the exact range could be done by studying ATR distribution by date across the whole data set. Maybe we use absolute number or maybe we use a percentile over the last X days, which may have to be optimized as well. We could also study VIX (or RVX) to see if it makes sense to stay out with VIX above Y or above the Z’th percentile over the last W days. A more complicated combination would be to center the trade above (below) the money when momentum is positive (negative) or when we get a Donchian channel breakout to the upside (downside). This may be more a study of mean reversion vs. trend following and probably something to study closely with stability in mind.
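The ATR filter might be prototyped as below. Two assumptions to flag: ATR here is a simple average of true range over the period (Wilder's original uses a smoothed average), and the percentile is computed as a plain rank within a lookback window. All names and inputs are hypothetical.

```python
from typing import List, Sequence


def true_ranges(highs: Sequence[float], lows: Sequence[float], closes: Sequence[float]) -> List[float]:
    """True range for each bar after the first."""
    result = []
    for i in range(1, len(closes)):
        prev_close = closes[i - 1]
        result.append(max(highs[i] - lows[i],
                          abs(highs[i] - prev_close),
                          abs(lows[i] - prev_close)))
    return result


def atr(highs: Sequence[float], lows: Sequence[float], closes: Sequence[float], period: int = 14) -> float:
    """Simple-average ATR over the most recent `period` true ranges."""
    ranges = true_ranges(highs, lows, closes)
    if len(ranges) < period:
        raise ValueError("not enough bars for the requested period")
    return sum(ranges[-period:]) / period


def percentile_rank(value: float, history: Sequence[float]) -> float:
    """Fraction of values in `history` that are at or below `value`."""
    return sum(1 for x in history if x <= value) / len(history)


def entry_allowed(current_atr: float, atr_history: Sequence[float], max_percentile: float) -> bool:
    """Allow entry only when current ATR ranks at or below the chosen percentile."""
    return percentile_rank(current_atr, atr_history) <= max_percentile


# Tiny made-up example.
print(atr([10, 12, 11], [9, 10, 9], [9.5, 11, 10], period=2))
print(entry_allowed(2.0, [1.0, 2.0, 3.0, 4.0], max_percentile=0.5))
```

The same `percentile_rank` helper would work for the VIX (or RVX) version of the filter by passing in the last W days of closes.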

Categories: Backtesting | Comments (0) | Permalink

Butterfly Backtesting Ideas (Part 2)

Posted by Mark on May 11, 2020 at 08:23 | Last modified: May 8, 2020 07:24

I’ve been going through my “drafts” folder this year trying to finish partially-written blog posts and get more organized. Here is a one-off post whose sequel simply did not get completed. As I have said before, in the longshot case that someone out there could possibly benefit from any of this, what follows is Part 2 from June 15, 2017.

—————————

Everywhere I look people seem to be doing butterflies of some sort so I’m really interested to find the attraction.

I would like to do a backtest of the flat iron butterfly but before that I’d like to do a typical, symmetric butterfly. I’d like to place this above the money because of all the talk of doing the asymmetric in order to balance NPD, which is normally negative at inception on an ATM symmetric butterfly due to vertical index skew. Rather than take on a boatload of additional risk in the form of asymmetric wings (embedded vertical spread), centering the trade above the money will offset natural negative delta at trade inception.

How far above the money?

If I’m looking at 10-point RUT strikes then going below the money could be 1-10 points, which is 0.25% to 2.5% with RUT at 400 or 0.071% to 0.71% for RUT at 1400. The former is much larger but if the average move (ATR?) is much smaller in case of the former (it should be) then everything should be fine.

One thought that comes to mind is that IV might tell me whether we’re in a relatively volatile (high ATR) or channeling (low ATR) market, but it’s possible that all incidents of RUT < 400 are relatively volatile whereas higher prices may lack volatility on a relative basis. This is true because of the limited sample size of trading days < 400. I should do a study of # days with RUT price between certain ranges (maybe look every 100 points) and check average IV and IV SD.

How wide should my wings be?

Part of me would like to take a fixed wing width and use it throughout. The problem I see is that the percentage will differ greatly if the index is at 400 vs. 1400. This is an argument for using a constant percentage. Of course, I can take whatever width and multiply by the appropriate number of contracts to somewhat normalize margin requirement (MR). My concern here is that a fixed width might be relatively wide with respect to ATR for some butterflies and relatively narrow for others. In other words, if a fixed width ends up being a minimal move in some markets vs. a huge move in others, then maybe I should use another indicator to tell me what width best fits a certain market and then adjust total risk (MR) by adjusting position size.

Implied Volatility Spikes

Posted by Mark on August 27, 2019 at 07:16 | Last modified: May 14, 2020 11:14

One of my projects next year will be to clear out my drafts folder. Most of these entries are rough drafts or ideas for blog posts. This is one of 40+ drafts in the folder: a study on incidence of IV increase.

For equity trend-following (mean-reversion) traders, IV spike is a potential trigger to get short (long).

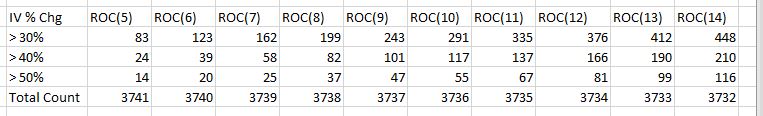

This was a spreadsheet study I did in December 2016. I looked at RUT IV from 1/2/2002 – 11/17/2016. I calculated the number of occurrences IV increased by 30% or more, 40% or more, and 50% or more over the previous 5 – 14 trading days.
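The original study was done in a spreadsheet, but the counting step could be sketched in Python as follows. I am assuming "increased by X% over the previous N trading days" means today's IV compared with the IV exactly N trading days earlier; the function name and the IV series are made up.

```python
from typing import Sequence


def count_spikes(iv: Sequence[float], lookback: int, threshold: float) -> int:
    """Count days where IV is at least `threshold` (e.g. 0.30 for 30%)
    above its value `lookback` trading days earlier."""
    return sum(
        1
        for i in range(lookback, len(iv))
        if iv[i] / iv[i - lookback] - 1.0 >= threshold
    )


# Made-up IV series for illustration only.
iv = [20, 20, 21, 20, 19, 20, 27, 30, 22, 21]

for threshold in (0.30, 0.40, 0.50):
    print(f">= {threshold:.0%} over 5 days:", count_spikes(iv, lookback=5, threshold=threshold))
```

Running this over lookbacks of 5 through 14 and the three thresholds would reproduce the shape of the table, although consecutive trigger days are counted separately here and a real study might want to merge them.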

Here are the raw data:

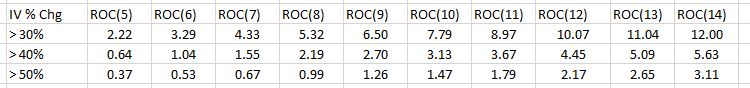

Here are the percentages:

If I’m going to test a trade trigger, then I would prefer to find one with a large sample size of occurrences. Vendors are notorious for the fallacy of the well-chosen example (second-to-last paragraph here). This is a chart perfectly suited for the strategy, system, or whatever else they are trying to sell. When professionally presented, it looks wondrous; little do we know it represents a small sample size and is something that has rarely come to pass.

This trigger may avoid the small sample size categorization. Even in the >50% line (first table), periods of 8 – 14 show at least 30 occurrences. Some people regard 30 or more as constituting a sufficiently large sample size. I think length of time interval is relevant, too. We have roughly 15 years of data here. 30 occurrences is about twice per year. If I want four or more occurrences per year, then perhaps I look to >40% (period at least eight) or >30% as a trigger.

With regard to percentages, my mind goes straight to the 95% level of significance. Any trigger that occurs <5% of the time represents a significant event. I still don’t want too few occurrences, though. 1.5 standard deviations encompasses 87% of the population so maybe something that occurs less than 13% of the time or ~6.5% of the time (one-tailed) could be targeted.
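The coverage figures can be verified with the standard library. Note this assumes a normal distribution, which IV changes may well not follow; the function name is mine.

```python
import math


def two_sided_coverage(z: float) -> float:
    """Fraction of a normal population within +/- z standard deviations of the mean."""
    return math.erf(z / math.sqrt(2.0))


z = 1.5
inside = two_sided_coverage(z)
outside = 1.0 - inside
one_tail = outside / 2.0

print(f"within +/-{z} SD: {inside:.1%}")
print(f"outside (two-tailed): {outside:.1%}")
print(f"outside (one-tailed): {one_tail:.1%}")
```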

Another consideration would be to look at the temporal distribution of these triggers. Ideally, I would like to see a smooth distribution with triggers spread evenly over time (e.g. every X months). A lumpy distribution where triggers are clustered around a handful of dates may be more reflective of the dreaded small sample size.

The next step for this research would be to study what happens when these triggers occur. Once the dependent variable is selected, we have enough data here to examine the surrounding parameter space (see previous link).

Categories: Backtesting | Comments (0) | Permalink